PySpark: Filtering Array Columns

2024-01-11

Gabriel Montpetit

I looked online for a way to filter PySpark array columns based on other columns, but I could only find examples where the filter used a static condition.

Here is how to filter an array column with a predicate that references other columns of the same row.

Create The Spark Session

    import pyspark.sql.functions as F
    from pyspark.sql import SparkSession

    spark = (
        SparkSession
        .builder
        .appName("filtering_numbers")
        .master("local[2]")
        .getOrCreate()
    )

Create The DataFrame

    # create initial dataframe
    df = spark.createDataFrame([
        {"name": "Haskell", "preferred_number": 5, "available_numbers": [1, 6, 100, 33, 44, 99]},
        {"name": "Odersky", "preferred_number": 2, "available_numbers": [1, 6, 100, 33, 44, 99]},
    ])

Apply The Filtering

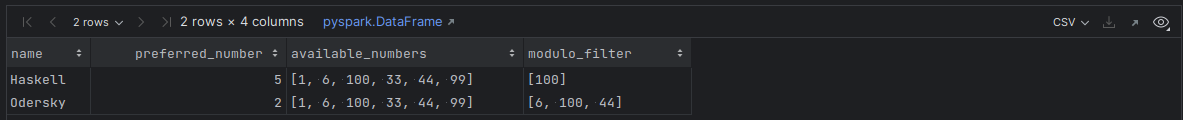

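In this example, we want to filter available_numbers with a predicate that checks whether available_number MOD preferred_number == 0, where available_number is each element of the array and preferred_number is the value from the same row. Only the elements divisible by that row's preferred number are kept.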
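
To make the predicate concrete, here is a plain-Python sketch (no Spark involved) that applies the same rule to the two sample rows. The helper name modulo_filter is made up for this illustration and is not a PySpark function.

```python
# Plain-Python illustration of the predicate; this does not use Spark.
rows = [
    {"name": "Haskell", "preferred_number": 5, "available_numbers": [1, 6, 100, 33, 44, 99]},
    {"name": "Odersky", "preferred_number": 2, "available_numbers": [1, 6, 100, 33, 44, 99]},
]


def modulo_filter(row):
    """Hypothetical helper: keep the numbers divisible by this row's preferred_number."""
    return [n for n in row["available_numbers"] if n % row["preferred_number"] == 0]


expected = {row["name"]: modulo_filter(row) for row in rows}
print(expected)  # {'Haskell': [100], 'Odersky': [6, 100, 44]}
```

These per-row lists are what the filtered array column should contain for each person.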

    # filter the available numbers based on the person's preferred number
    (
        df
        .withColumn("modulo_filter", F.filter("available_numbers", lambda x: (x % F.col("preferred_number") == 0)))
        .show(truncate=False)
    )